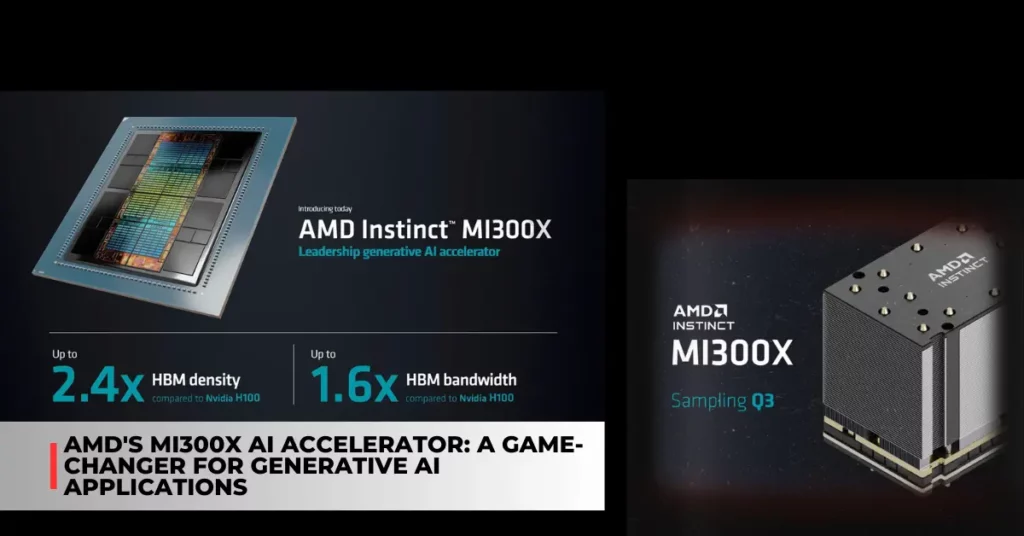

AMD has recently unveiled its new AI datacenter accelerator, the MI300X, which is designed to handle the most demanding generative AI workloads, such as large language models (LLMs).

The MI300X is built on the same platform as the MI300A, which combines CPU and GPU chiplets in a single package. In the MI300X, the CPU chiplets are replaced with two additional GPU chiplets for more computing power.

The MI300X packs 153 billion transistors, 192 GB of HBM3 memory, and 5.2 TB/s of memory bandwidth, which AMD positions as the most powerful AI accelerator on the market.

What is Generative AI and Why is It Important?

Generative AI is a branch of artificial intelligence that focuses on creating new content or data from existing data, such as images, text, audio, or video. Generative AI can be used for various applications, such as content creation, data augmentation, image synthesis, text generation, speech synthesis, and more.

One of the most popular and challenging generative AI tasks is training and running large language models, which are neural networks that can learn from and generate natural language.

LLMs can be used for natural language understanding, natural language generation, question answering, summarization, translation, and more. However, LLMs require a lot of compute power and memory, as they are composed of billions of parameters that need to be processed and stored.

Antonio Linares shared a post on Twitter:

15/14: proof that this is happening 👇 $AMD's new MI300X AI Accelerator chip has 192GB of memory.

“With all of that additional memory capacity, we actually have an advantage for large language models because we can run larger models directly in memory” – Lisa Su, $AMD CEO. pic.twitter.com/Jhn63gtok7

— Antonio Linares (@alc2022) June 21, 2023

How Does the MI300X Excel at Generative AI?

According to AMD, the MI300X is the first AI accelerator that can run a 40-billion-parameter LLM, such as Falcon-40B, entirely in the memory of a single device, without splitting the model across several accelerators. Keeping the whole model in on-package HBM3 avoids moving data back and forth between devices, which reduces latency and improves the performance and efficiency of the LLM.
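
As a rough sanity check on the in-memory claim, the sketch below estimates the space needed for model weights alone. The bytes-per-parameter values and the helper names (`weights_gb`, `fits_on_one_device`) are illustrative assumptions, not AMD figures. Real deployments also need room for the KV cache, activations, and runtime overhead.

```python
# Back-of-the-envelope estimate of weight storage for an LLM.
# Assumption: weights only; KV cache, activations, and runtime overhead are ignored.

MI300X_HBM_GB = 192  # capacity quoted in the article

# Assumed storage cost per parameter for common numeric formats.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}


def weights_gb(num_params: float, dtype: str = "fp16") -> float:
    """Return the approximate weight size in GB (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


def fits_on_one_device(num_params: float, dtype: str = "fp16",
                       capacity_gb: float = MI300X_HBM_GB) -> bool:
    """True if the weights alone fit within the given memory capacity."""
    return weights_gb(num_params, dtype) <= capacity_gb


if __name__ == "__main__":
    for dtype in BYTES_PER_PARAM:
        size = weights_gb(40e9, dtype)
        print(f"40B parameters in {dtype}: {size:.0f} GB, "
              f"fits in {MI300X_HBM_GB} GB: {fits_on_one_device(40e9, dtype)}")
```

Under these assumptions, a 40-billion-parameter model stored in 16-bit precision needs about 80 GB for its weights, which leaves headroom within 192 GB for the working memory that inference also requires.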

AMD says a single MI300X can hold LLMs of up to roughly 80 billion parameters. For larger models, the AMD Instinct Platform combines eight MI300X accelerators in one industry-standard system, for a total of 1.5 TB of HBM3 memory. The MI300X is based on the CDNA 3 architecture, which is AMD’s third-generation compute-optimized GPU architecture, designed specifically for AI and HPC workloads.

The MI300X features eight GPU chiplets, each with 38 compute units (CUs), for a total of 304 CUs. The GPU chiplets sit on I/O dies, which provide high-speed links to the HBM3 memory and the Infinity Fabric interconnect.
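
To put the eight-accelerator configuration in perspective, the following sketch computes aggregate HBM3 capacity and the minimum number of accelerators needed to hold a model's weights. It assumes 16-bit weights by default and perfectly even sharding, and the 500-billion-parameter model in the usage lines is a hypothetical example rather than a model named in this article.

```python
import math

MI300X_HBM_GB = 192  # per-accelerator capacity quoted in the article


def aggregate_hbm_gb(num_accelerators: int,
                     per_device_gb: float = MI300X_HBM_GB) -> float:
    """Total HBM capacity across a group of identical accelerators."""
    return num_accelerators * per_device_gb


def min_accelerators(num_params: float, bytes_per_param: int = 2,
                     per_device_gb: float = MI300X_HBM_GB) -> int:
    """Smallest device count whose combined memory holds the weights.

    Assumption: weights are split evenly and nothing else uses memory.
    """
    total_gb = num_params * bytes_per_param / 1e9
    return max(1, math.ceil(total_gb / per_device_gb))


if __name__ == "__main__":
    print(f"8 accelerators: {aggregate_hbm_gb(8):.0f} GB of HBM3 in total")
    print(f"80B parameters, 16-bit: {min_accelerators(80e9)} accelerator(s)")
    # Hypothetical larger model, used only to illustrate sharding.
    print(f"500B parameters, 16-bit: {min_accelerators(500e9)} accelerator(s)")
```

This is a lower bound only: in practice, the KV cache, activations, and communication buffers raise the number of devices required.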

The Infinity Fabric allows the MI300X to communicate with other accelerators, CPUs, and other devices with low latency and high bandwidth. On the software side, the MI300X is supported by AMD’s ROCm stack, which includes libraries such as MIOpen and works with AI frameworks such as TensorFlow and PyTorch, making it easier for developers and researchers to use the MI300X for their generative AI projects.
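
As a small illustration of what framework support looks like in practice: on ROCm builds of PyTorch, AMD GPUs are exposed through the same `torch.cuda` API that is used for Nvidia GPUs. The sketch below reports what PyTorch can see. The function name `describe_accelerator` is a hypothetical helper, and the output depends entirely on the machine and the PyTorch build it runs on.

```python
def describe_accelerator() -> str:
    """Return a one-line summary of the first GPU visible to PyTorch."""
    try:
        import torch
    except ImportError:
        return "PyTorch is not installed"

    if not torch.cuda.is_available():
        return "No GPU visible to PyTorch"

    # torch.version.hip is a version string on ROCm builds and None otherwise.
    backend = "ROCm/HIP" if getattr(torch.version, "hip", None) else "CUDA"

    props = torch.cuda.get_device_properties(0)
    memory_gb = props.total_memory / 1e9
    return f"{props.name} via {backend}, {memory_gb:.0f} GB of device memory"


if __name__ == "__main__":
    print(describe_accelerator())
```

Because the device API is shared, model code written against `torch.cuda` generally does not need a separate code path for AMD hardware, although individual kernels and third-party extensions may still differ.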

How Does the MI300X Compare to the Competition?

The MI300X is AMD’s answer to Nvidia’s Hopper GPUs, which are also designed for AI and HPC workloads. The H100 is built on the GH100 GPU and, in its SXM form, offers 132 streaming multiprocessors (SMs) and 80 GB of HBM3 memory.

The Hopper GPUs also support Nvidia’s NVLink interconnect, which provides high-bandwidth links so that multiple GPUs can work together on a single workload.

According to AMD, the MI300X offers 2.4 times the memory density and 1.6 times the memory bandwidth of the Nvidia H100 Hopper GPU, which is the flagship model of the Hopper family.
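
The two ratios can be reproduced with simple arithmetic. In the sketch below, the MI300X figures come from this article, while the H100 figures (80 GB and 3.35 TB/s for the SXM variant) are taken from Nvidia's published specifications and should be treated as an assumption about which H100 variant AMD compared against.

```python
# MI300X figures as quoted in the article.
MI300X = {"memory_gb": 192, "bandwidth_tbs": 5.2}

# Assumed comparison point: Nvidia's published H100 SXM specifications.
H100_SXM = {"memory_gb": 80, "bandwidth_tbs": 3.35}


def advantage(metric: str) -> float:
    """Ratio of the MI300X figure to the H100 SXM figure for one metric."""
    return MI300X[metric] / H100_SXM[metric]


if __name__ == "__main__":
    print(f"Memory capacity ratio:  {advantage('memory_gb'):.2f}x")
    print(f"Memory bandwidth ratio: {advantage('bandwidth_tbs'):.2f}x")
```

The capacity ratio works out to 2.4, and the bandwidth ratio to about 1.55, which rounds to the 1.6 quoted by AMD.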

AMD also claims higher performance and efficiency than the H100, especially for generative AI workloads. However, the MI300X draws more power: it is rated for 750 W, compared with up to 700 W for the H100 in its SXM form.

This means that MI300X deployments will need somewhat more cooling and power delivery capacity, which could increase their cost and complexity.

Conclusion

The MI300X is AMD’s latest AI datacenter accelerator, tailored for generative AI workloads such as large language models. Its large compute and memory capacity allow it to run LLMs entirely in memory, and up to eight accelerators can be combined in a single platform.

The MI300X also supports various AI frameworks and libraries, making it easy for developers and researchers to use the MI300X for their generative AI projects.

The MI300X is AMD’s challenge to Nvidia’s dominance in the AI and HPC market: AMD claims higher performance and efficiency than Nvidia’s Hopper GPUs, especially for generative AI workloads.

However, the MI300X also has a higher power consumption than the Hopper GPUs, which could limit its adoption and deployment. The MI300X is expected to be available soon and will be used by Microsoft Azure for its AI-optimized virtual machines.

The MI300X will also be used by AMD and Microsoft for their AI PC initiative, which aims to bring AI capabilities to the desktop and laptop market. The MI300X is a generative AI powerhouse that could change the landscape of AI computing.